In this article, we will learn the fundamentals of neural networks: how a neural network is structured, what the components of a neuron are, and how a network is trained and optimized. The article aims to help anyone, from a technical or non-technical background, understand what the fundamental building block of modern AI is and how it works. Throughout, we compare building a neural network to baking a cake. I hope this article answers the basic questions of anyone who wants to learn about AI and ML.

Introduction

Imagine you’re in a crowded room trying to pick out individual faces or listening to someone speak many different languages. Easy for humans, not so easy for machines. Neural networks, inspired by the human brain, have revolutionized artificial intelligence. This guide is designed for both technical experts and newcomers, using analogies from daily life to make these complex concepts easier to grasp.

Fundamentals of Neural Networks

Biological Inspiration

Neural networks are loosely inspired by the human brain, which comprises billions of interconnected neurons. These neurons communicate through electrical and chemical signals, enabling various cognitive functions. Picture your brain as a network of neurons that lets you think, learn, and remember. Similarly, artificial neural networks consist of artificial neurons (nodes) connected in layers, a simplified imitation of how the brain processes information and makes decisions.

Structure of a Neural Network

A neural network is built like a multi-layered cake, with each layer serving a unique function:

Input Layer: Imagine the input data as ingredients like flour, sugar, and eggs in a bakery. The input layer receives data in various forms (images, text, numbers), each encoded as numbers, with each neuron representing one feature of the input.

Hidden Layers: Think of these as bakers mixing the ingredients and baking the cake. Hidden layers transform the data step by step, each one building on the previous layer’s output to learn useful intermediate features (for example, edges and shapes in an image). They’re termed “hidden” because their values are not directly observed as the network’s input or output.

Output Layer: This is the finished cake you see and taste. The output layer presents the final answer, such as a class label (e.g., chocolate or vanilla) or a predicted value (e.g., a stock price). For classification, it typically has one neuron per possible class; for predicting a single number, it has one neuron.
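One way to see how the layers fit together is to count the learnable numbers (weights and biases) in a small fully connected network, where every neuron is connected to every neuron in the next layer. The Python sketch below assumes hypothetical layer sizes of 3 inputs, 4 hidden neurons, and 2 outputs; `count_parameters` is an illustrative helper, not part of any library.

```python
# Hypothetical layer sizes: 3 input features, one hidden layer of 4 neurons,
# and 2 output neurons (e.g. "chocolate" vs. "vanilla").
layer_sizes = [3, 4, 2]

def count_parameters(sizes):
    """Count weights and biases in a fully connected network."""
    total = 0
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        weights = n_in * n_out  # one weight per connection between the two layers
        biases = n_out          # one bias per neuron in the receiving layer
        total += weights + biases
    return total

print(count_parameters(layer_sizes))  # 3*4 + 4 + 4*2 + 2 = 26
```

Each connection between two layers carries one weight, and each neuron outside the input layer has one bias, which is why adding or widening a layer quickly increases the number of values the network has to learn.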

Neurons and Activation Functions

Every neuron in a neural network processes input from the previous layer and forwards the result to the next layer. This involves two key steps:

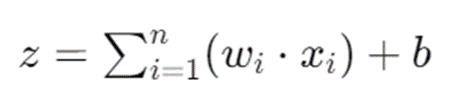

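Weighted Sum: Like measuring recipe ingredients in different amounts, the neuron multiplies each input by its own weight, adds the results together, and then adds a bias. Mathematically:

$$z = \sum_{i=1}^{n} w_i x_i + b$$

where $x_i$ are the inputs, $w_i$ are the weights, $b$ is the bias, and $n$ is the number of inputs.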
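The weighted sum described above takes only a few lines of Python. The input, weight, and bias values here are made up for illustration, and `weighted_sum` is a hypothetical helper name.

```python
def weighted_sum(inputs, weights, bias):
    """Compute z = w1*x1 + w2*x2 + ... + wn*xn + b for a single neuron."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    return sum(w * x for w, x in zip(weights, inputs)) + bias

# Hypothetical "recipe": three ingredient amounts and how much each one matters.
x = [2.0, 1.0, 3.0]
w = [0.5, -1.0, 0.25]
b = 0.1
print(weighted_sum(x, w, b))  # 0.5*2 - 1.0*1 + 0.25*3 + 0.1 = 0.85
```

A negative weight (here, -1.0) means that input pulls the neuron’s output down, while a larger positive weight makes that input matter more.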

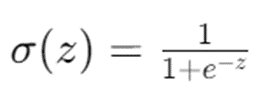

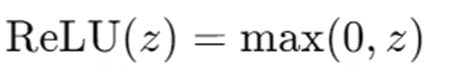

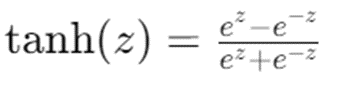

Activation Function: Think of this as the baking process that transforms the raw ingredients into a delicious cake. The activation function introduces non-linearity, allowing the network to learn complex patterns. Common activation functions include:

- Sigmoid: Like slowly rising dough, it squashes input values between 0 and 1.

- ReLU (Rectified Linear Unit): Imagine a cake that can’t burn; it passes positive values through unchanged and sets negative values to zero, i.e., ReLU(z) = max(0, z).

- Tanh (Hyperbolic Tangent): Similar to the sigmoid but squashes values between -1 and 1, like a balanced flavor profile.
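Here is a minimal Python sketch of the three activation functions listed above, using only the standard `math` module. The sigmoid splits on the sign of its input so that very negative inputs don’t overflow `math.exp`; this is an implementation detail, not something the activation itself requires.

```python
import math

def sigmoid(z):
    """Squashes any real number into the range (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    # Equivalent form for negative z that avoids overflow in math.exp(-z).
    e = math.exp(z)
    return e / (1.0 + e)

def relu(z):
    """Keeps positive values unchanged and replaces negative values with 0."""
    return max(0.0, z)

def tanh(z):
    """Squashes any real number into the range (-1, 1)."""
    return math.tanh(z)

for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  sigmoid={sigmoid(z):.3f}  relu={relu(z):.1f}  tanh={tanh(z):+.3f}")
```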

Training Neural Networks

Training a neural network is like teaching a child to recognize different animals. It involves showing examples (pictures of animals) and adjusting the understanding (weights and biases) until the child correctly identifies them.

Forward Propagation

This is like showing the child a picture of a cat and asking what animal it is. The input data (the picture) passes through the network layer by layer until the output (the child’s answer) is produced. At each neuron along the way, the network calculates the weighted sum of its inputs and applies the activation function.
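Forward propagation can be sketched in plain Python by chaining layers, where each layer computes weighted sums plus biases and applies an activation. This assumes a tiny hypothetical network (2 inputs, 2 hidden ReLU neurons, 1 sigmoid output) with hand-picked weights; real frameworks use optimized matrix operations instead of Python loops.

```python
import math

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense_layer(inputs, weights, biases, activation):
    """Fully connected layer: each neuron takes a weighted sum of all inputs,
    adds its bias, and applies the activation function."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]

def forward(x, layers):
    """Forward propagation: feed the output of each layer into the next."""
    for weights, biases, activation in layers:
        x = dense_layer(x, weights, biases, activation)
    return x

# Hypothetical network: 2 inputs -> 2 hidden neurons (ReLU) -> 1 output (sigmoid).
layers = [
    ([[0.5, -0.5], [1.0, 1.0]], [0.0, -1.0], relu),  # one weight row per hidden neuron
    ([[1.0, 2.0]], [0.5], sigmoid),                  # output neuron
]
print(forward([1.0, 2.0], layers))  # hidden = [0.0, 2.0], output = sigmoid(4.5)
```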

Loss Function

The loss function measures how far the network’s prediction is from the actual answer. It’s like a teacher correcting the child’s mistake, explaining that the animal in the picture is a cat, not a dog. The larger the difference between the predicted output and the actual output, the larger the loss. Common loss functions include:

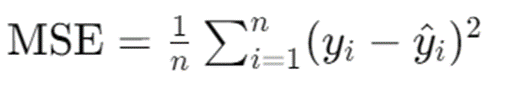

- Mean Squared Error (MSE) for regression tasks:

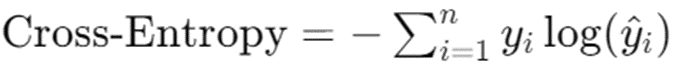

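- Cross-Entropy Loss for classification tasks:

$$\text{CE} = -\sum_{c=1}^{C} y_c \log \hat{y}_c$$

where $C$ is the number of classes, $y_c$ is 1 for the correct class and 0 otherwise, and $\hat{y}_c$ is the network’s predicted probability for class $c$.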
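Both loss functions above can be computed directly. This sketch assumes the network’s classification output is already a list of probabilities summing to 1 and that the true class is given as an index; the numbers and helper names are hypothetical.

```python
import math

def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between targets and predictions."""
    n = len(y_true)
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n

def cross_entropy(true_class, predicted_probs):
    """Negative log of the probability the model gave to the correct class
    (cross-entropy for a single example with a one-hot target)."""
    eps = 1e-12  # avoid log(0) if a probability is exactly zero
    return -math.log(max(predicted_probs[true_class], eps))

# Regression: predicted vs. actual values (hypothetical numbers).
print(mean_squared_error([3.0, 5.0], [2.5, 5.5]))  # (0.25 + 0.25) / 2 = 0.25
# Classification: classes [cat, dog]; the picture is a cat (index 0).
print(cross_entropy(0, [0.9, 0.1]))  # confident and right -> small loss
print(cross_entropy(0, [0.2, 0.8]))  # confident and wrong -> large loss
```

Notice that cross-entropy punishes a confident wrong answer much more than a hesitant one, which pushes the network to put high probability on the correct class.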

Backward Propagation

This is the process of updating the child’s understanding based on the correction, so that future mistakes become less likely. Backward propagation, or backpropagation, calculates the gradient of the loss function with respect to each weight and bias using the chain rule of calculus. An optimization algorithm, such as gradient descent, then uses these gradients to update the weights and biases in the direction that reduces the loss.
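To make the chain rule concrete, here is a sketch of backpropagation and gradient descent for the simplest possible case: a single sigmoid neuron, a squared-error loss, and one training example. The input, target, starting weights, and learning rate are hypothetical; in a multi-layer network the same chain-rule step is repeated layer by layer, from the output back toward the input.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss_and_gradients(x, y, w, b):
    """Squared-error loss of one sigmoid neuron and its gradients via the chain rule."""
    # Forward pass
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    a = sigmoid(z)
    loss = (a - y) ** 2
    # Backward pass: dL/dw_i = dL/da * da/dz * dz/dw_i
    dL_da = 2.0 * (a - y)
    da_dz = a * (1.0 - a)              # derivative of the sigmoid
    dL_dz = dL_da * da_dz
    grad_w = [dL_dz * xi for xi in x]  # dz/dw_i = x_i
    grad_b = dL_dz                     # dz/db = 1
    return loss, grad_w, grad_b

# Hypothetical training example and starting parameters
x, y = [1.0, 2.0], 1.0
w, b = [0.1, -0.2], 0.0
learning_rate = 0.5

for step in range(3):
    loss, grad_w, grad_b = loss_and_gradients(x, y, w, b)
    print(f"step {step}: loss={loss:.4f}")
    # Gradient descent: move each parameter against its gradient
    w = [wi - learning_rate * gi for wi, gi in zip(w, grad_w)]
    b = b - learning_rate * grad_b
```

The printed loss shrinks at each step because every parameter moves a small distance in the direction that lowers the error.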

Optimization Algorithms

These algorithms help fine-tune the network’s learning process, like different teaching methods that make learning more effective. Popular optimization algorithms include:

- Stochastic Gradient Descent (SGD): Teaching the child using one example at a time. The weights and biases are updated using the gradient computed from a single training example or a small batch of examples (a mini-batch), rather than from the whole dataset.

- Adam (Adaptive Moment Estimation): Combining different teaching methods to adapt to the child’s learning pace. Adam combines the benefits of SGD with momentum and RMSprop, adjusting the learning rate of each parameter based on running estimates of the first and second moments (the mean and the uncentered variance) of the gradients.
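The update rules themselves are short. This sketch minimizes a hypothetical one-parameter toy loss, L(w) = (w - 3)^2, whose gradient is known exactly; in real SGD the gradient would instead be estimated from one example or a mini-batch. The Adam hyperparameters `beta1`, `beta2`, and `eps` follow commonly used defaults, while the learning rate of 0.1 is chosen only so the toy example converges quickly (libraries commonly default Adam to 0.001).

```python
import math

def grad(w):
    """Gradient of the toy loss L(w) = (w - 3)^2, whose minimum is at w = 3."""
    return 2.0 * (w - 3.0)

def sgd(w, lr=0.1, steps=100):
    """Plain gradient-descent update: w <- w - lr * gradient."""
    for _ in range(steps):
        w -= lr * grad(w)
    return w

def adam(w, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    """Adam update with bias-corrected moment estimates."""
    m, v = 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g      # running mean of gradients (first moment)
        v = beta2 * v + (1 - beta2) * g * g  # running mean of squared gradients (second moment)
        m_hat = m / (1 - beta1 ** t)         # correct the bias from starting at zero
        v_hat = v / (1 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)  # per-parameter adaptive step
    return w

print(sgd(0.0), adam(0.0))  # both should end up close to 3.0
```

SGD’s step is proportional to the raw gradient, while Adam divides by the running size of recent gradients, so its step size adapts automatically for each parameter.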

Types of Neural Networks

Just as there are different tools for different jobs, various types of neural networks are suited for specific tasks:

Feedforward Neural Networks (FNNs)

These are like simple recipes where ingredients are mixed in one direction. Feedforward neural networks are the simplest type of neural network: data flows in one direction, from the input layer through any hidden layers to the output layer, with no loops. They are used for classification and regression tasks, such as classifying emails as spam or not.

Convolutional Neural Networks (CNNs)

Imagine a chef who specializes in intricate cake designs, carefully decorating one section of the cake at a time. Convolutional neural networks are designed for grid-like data such as images. Their convolutional layers slide small filters across the image to extract spatial features (such as edges and textures), and pooling layers reduce the size of the resulting feature maps. CNNs are widely used in image recognition and other computer vision applications, like recognizing objects in photos.
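The two building blocks mentioned above, convolution and pooling, can be sketched in plain Python. The 5x5 image and the 2x2 edge-detecting filter are hypothetical; in a real CNN the filter values are learned during training rather than written by hand. Like most deep learning libraries, `conv2d` here actually computes cross-correlation (the filter is not flipped), which is what “convolution” usually means in this context.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution: slide the kernel over the image and take a
    weighted sum of the pixels under it at each position."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def max_pool(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    return [
        [
            max(feature_map[i + di][j + dj] for di in range(size) for dj in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]

# Hypothetical 5x5 image with a vertical edge (dark on the left, bright on the right)
image = [[0, 0, 1, 1, 1] for _ in range(5)]
vertical_edge = [[-1, 1], [-1, 1]]  # responds where brightness increases left to right

features = conv2d(image, vertical_edge)
print(features)            # [[0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0]]
print(max_pool(features))  # [[2, 0], [2, 0]]
```

The filter responds strongly only along the column where the image changes from dark to bright, and pooling keeps that strong response while halving the width and height of the feature map.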

Recurrent Neural Networks (RNNs)

Think of a storyteller who remembers past events to tell a coherent story. Recurrent neural networks are suited for sequential data, such as time series or natural language (for example, in language translation). They have connections that loop back, allowing them to carry information from previous time steps forward to inform later ones. Variants such as Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRU) address traditional RNNs’ difficulty in capturing long-term dependencies.
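The “loop back” of an RNN boils down to feeding the previous hidden state into the next step. This sketch uses a single hidden unit and scalar, hypothetical weights to keep things readable; real RNNs use vectors and weight matrices, and LSTMs and GRUs add gates on top of this basic update.

```python
import math

def rnn_forward(sequence, w_x, w_h, b):
    """Run a single-unit vanilla RNN over a sequence of numbers.

    At each step the new hidden state mixes the current input with the
    previous hidden state: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b).
    """
    h = 0.0  # the "memory" starts empty
    states = []
    for x in sequence:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

# Hypothetical weights; w_h controls how much of the past is carried forward.
print(rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0))
```

Even though the input is nonzero only at the first step, the hidden state stays nonzero afterwards: that carried-over value is the network’s memory of earlier inputs. It fades at each step when `w_h` is small, which hints at why plain RNNs struggle with long-term dependencies.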

Generative Adversarial Networks (GANs)

These are like a friendly competition between two bakers: one creates cakes (the generator) and the other judges their quality (the discriminator). Generative adversarial networks consist of these two neural networks competing against each other. The generator creates fake data and tries to make it convincing, while the discriminator tries to tell fake data from real data. GANs are used for tasks such as generating realistic images and data augmentation.
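The competition can be expressed as two loss functions computed from the discriminator’s output, a probability that its input is real. This sketch follows the discriminator loss from the original GAN formulation together with the widely used “non-saturating” generator loss; the probabilities are hypothetical and must lie strictly between 0 and 1, and the training loop that updates both networks is left out.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the judge: it should output ~1 for real data
    and ~0 for generated (fake) data. d_real and d_fake are probabilities."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake):
    """Non-saturating generator loss: the generator is rewarded when the
    discriminator assigns a high 'real' probability to its fakes."""
    return -math.log(d_fake)

# Hypothetical outputs early in training: the judge easily spots the fakes.
print(discriminator_loss(d_real=0.9, d_fake=0.1), generator_loss(d_fake=0.1))
# Later: the fakes are more convincing, so the judge's loss rises and the generator's falls.
print(discriminator_loss(d_real=0.6, d_fake=0.5), generator_loss(d_fake=0.5))
```

During training, the two networks take turns: the discriminator is updated to lower its loss, then the generator is updated to lower its own, which is exactly the tug of war between the two bakers.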

Applications of Artificial Neural Networks

Neural networks have countless applications that touch our everyday lives:

Image and Video Recognition: CNNs enable phones to recognize faces in photos and videos, and are used in medical imaging to detect diseases.

Natural Language Processing (NLP): Sequence models, such as RNN variants like LSTMs, help virtual assistants like Siri or Alexa understand and respond to voice commands, and are used in language translation apps.

Self-driving Cars: Neural networks help self-driving cars recognize pedestrians, street signs, and other vehicles, enabling real-time decision-making for safe driving.

Medical Applications: Neural networks assist medical professionals in diagnosing diseases from images and predicting patient outcomes, enhancing care quality.

Financial Applications: Neural networks are used to forecast stock prices, detect fraudulent transactions, and manage risk, supporting financial institutions’ decision-making.

FAQs

What are neural networks?

Neural networks are computational models inspired by the human brain, consisting of layers of interconnected artificial neurons that process and make decisions based on input data.

How do neural networks learn?

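Neural networks learn through a process called training: they are shown examples, and their weights and biases are adjusted to minimize the error (loss), using backpropagation to compute the gradients and an optimization algorithm such as gradient descent to apply the updates.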

What is the difference between CNN and RNN?

CNNs are designed for image-related tasks, focusing on different image sections, while RNNs handle sequential data, retaining information from previous inputs for tasks like language translation.

What are activation functions in neural networks?

Activation functions introduce non-linearity into the network, enabling it to learn complex patterns. Examples include sigmoid, ReLU, and tanh.

How do neural networks apply to self-driving cars?

Neural networks in self-driving cars recognize and interpret visual data, such as pedestrians and street signs, enabling real-time decision-making for safe navigation.

Why are GANs important?

GANs generate realistic data by pitting two networks against each other—a generator and a discriminator. They are used for tasks like image generation and data augmentation.

Conclusion

Neural networks have transformed the field of artificial intelligence, enabling remarkable advancements across various domains. By understanding their structure, functioning, and applications, we can appreciate the immense potential and challenges of this powerful technology. As research and development continue, the future holds exciting possibilities for further innovations and applications of neural networks in our everyday lives.